Research

Auditory and Music Development

Interactions between caregivers and infants: Coordination of gaze, blinking, and facial expressions during singing and speech

All over the world, mothers (or primary caregivers) communicate with their infants through both infant-directed (ID) singing and ID speech, and infants coordinate their behavior in response. However, less is known about how infants influence their caregivers’ behavior. In this research, we are exploring two-way coordination between caregivers and infants, as this interactive "dance" seems to be crucial for infant learning and social bonding. We are also interested in why caregivers both talk and sing to infants, examining how coordination may differ during ID singing and ID speech, and how it might change over the first year as infants begin to learn language. We have developed a new method for simultaneously tracking where on each other's face the mother and infant are looking during interactions. One finding so far shows that, unlike in adult conversations, mothers are least likely to blink at phrase boundaries in their ID speech and singing. Infants, on the other hand, are most likely to blink just before their mother’s phrase boundaries, showing a complex coordination of visual attention that is guided by the rhythm of speech and music. We are currently analyzing gaze locations (eyes vs. mouth) and facial expressions of emotion to (1) examine what happens at specific times, such as beat or phrase onsets in ID speech and music, and (2) use mathematical modeling to measure how each person’s looking behavior and emotional expressions influence the other over time. Stay tuned for results from this study!

PhD student researcher: Sara Ripley

Music, speech, and rhythm in infants born prematurely

When we listen to speech, music, or other sounds, we use rhythm to organize the information and help us understand what we hear. The ability to perceive and distinguish rhythms is present in early infancy and continues to develop throughout childhood. Music and rhythm also play an important role in helping infants and caregivers bond and learn socially. Infants born prematurely are at risk for various developmental challenges, including delayed language and speech development. Given the importance of rhythm in developing language and social skills, we are investigating how premature infants process rhythms. We are using EEG and questionnaires to assess how their brains respond to rhythms and how this relates to their language and cognitive development. Additionally, since premature birth can affect infants’ gut bacteria (microbiome), we are also studying the relationship between infants’ gut bacteria and their cognitive outcomes to better understand how the gut and brain interact.

PhD student investigator: Maya Psaris

What kinds of rhythms make infants and children dance?

Dancing to music is found in every human culture. Dancing together at events like weddings, parties, and religious ceremonies increases bonds between people. Rhythm is the key musical element that tells us how to move, by providing a steady and predictable beat that we can easily synchronize our movements to. However, adding some complexity and surprise—known as syncopation in music—can make rhythms even more enjoyable to move to. Does a preference for some complexity in rhythms develop as we become more familiar with music, or is it a basic part of how our brains process rhythm? We have been exploring this question through a series of developmental studies, testing whether children and infants show the same preference for complex rhythms as adults do. In one study, we found that children aged 3–6 moved more during a dance task when listening to rhythms with moderate complexity compared to simpler rhythms, and they also chose those rhythms as "better for dancing" in a listening task. We used the same rhythms in a follow-up study with infants aged 6–18 months and found that they also preferred moving to more complex rhythms. We are currently using a touchscreen task to test whether infants, when given control over what they hear, choose complex rhythms more often than simple ones.

Postdoc researcher: Daniel Cameron

Cameron, D. J., Caldarone, N., Psaris, M., Carrillo, C., & Trainor, L. J. (2023). The complexity‐aesthetics relationship for musical rhythm is more fixed than flexible: Evidence from children and expert dancers. Developmental Science, 26(5), e13360. https://doi.org/10.1111/desc.13360

The influence of musical movement on infants' social behaviour

Trainor, LJ and Cirelli, L (2015). Rhythm and interpersonal synchrony in early social development. Ann N Y Acad Sci, 1337, 45-52.

Tracking two tones at once, at 3 and 7 months

The purpose of this study was to examine how polyphonic music and simultaneous sounds are encoded in the brain during development. Polyphonic music contains multiple melodic lines (referred to as "voices") which are often separated in pitch range and are equally important to the music. Because each voice is important, it is crucial for individuals to be able to separate and simultaneously analyze the individual melodies. In adults, we used Electroencephalography (EEG) to show that the brain is able to process the voices of a polyphonic melody in separate memory traces. Interestingly, we also found that adult brains process the higher pitched voice better than the lower pitched voice. We wanted to know how 7-month-old infants process simultaneous sounds. We presented two sounds as a repeating standard and occasionally modified the pitch of one tone. We found that at 7 months, infants showed very similar responses to adults. They are able to process each tone separately, and they already show better processing of the high voice than the low voice. We also found an association between amount of music listening at home and quality of simultaneous sound processing: the more they listened to music at home, the better their brain was able to process this polyphonic music. We are now extending this study to 3-month-old infants who have had much less music exposure. We hope to learn (1) when the ability to process each tone separately appears, and (2) if humans have an inherent preference for the high voice, or whether this is something learned through exposure to Western music.

Marie, C and Trainor, LJ (2013). Development of simultaneous pitch encoding: infants show a high voice superiority effect. Cereb Cortex, 23(3), 660-9.

Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations

Moving in synchrony with an auditory rhythm requires predictive action based on neurodynamic representation of temporal information. Although it is known that a regular auditory rhythm can facilitate rhythmic movement, the neural mechanisms underlying this phenomenon remain poorly understood. In this experiment using human magnetoencephalography, 12 young healthy adults listened passively to an isochronous auditory rhythm without producing rhythmic movement. We hypothesized that the dynamics of neuromagnetic beta-band oscillations (20 Hz), which are known to reflect changes in an active status of sensorimotor functions, would show modulations in both power and phase-coherence related to the rate of the auditory rhythm across both auditory and motor systems. Despite the absence of an intention to move, modulation of beta amplitude as well as changes in cortico-cortical coherence followed the tempo of sound stimulation in auditory cortices and motor-related areas including the sensorimotor cortex, inferior-frontal gyrus, supplementary motor area, and the cerebellum. The time course of beta decrease after stimulus onset was consistent regardless of the rate or regularity of the stimulus, but the time course of the following beta rebound depended on the stimulus rate only in the regular stimulus conditions, such that the beta amplitude reached its maximum just before the occurrence of the next sound. Our results suggest that the time course of beta modulation provides a mechanism for maintaining predictive timing, that beta oscillations reflect functional coordination between auditory and motor systems, and that coherence in beta oscillations dynamically configures the sensorimotor networks for auditory-motor coupling.

Is speech easier for infants to track if it is sung?

In recent work, Dr. Christina Vanden Bosch der Nederlanden measured EEG to show that adults’ brains are better at tracking speech syllables when a sentence is sung compared to when that same sentence is spoken. In past work, we have shown that when parents and caregivers talk to infants, they intuitively exaggerate their speech (e.g., use large pitch contours and regular rhythms), and that this “musical” speech helps to regulate their infants’ emotions, maximize social engagement, and aid in the difficult task of language learning. In the present study, we are investigating whether music can help infants to learn language. Specifically, we are using electroencephalography (EEG) to tell whether babies' brains are better at tracking word segments, like syllables, when infant-directed utterances are sung versus when they are spoken.

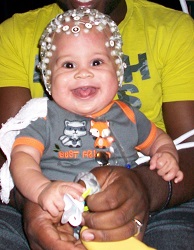

So far, thirty 4-month-old infants have listened to sentences from the children’s book series “George and Martha” (including their stories about how George hates Martha's Split Pea Soup!) spoken and sung in an infant-directed manner while we measured their EEG brain responses (see our adorable junior scientist pics of infants wearing our comfortable saline-soaked EEG nets!). We are analyzing how well each infant’s brain tracked the speech syllables in the sung versus spoken versions. Later, as each child reaches their first birthday, we will collect a list of words that they understand and that they can say themselves (MacArthur-Bates Communicative Development Inventories), to see how their neural tracking at 4 months of age relates to their emerging language at 12 months. This study will tell us whether sung speech helps infants to track syllables in language. It will also give us an early neural marker of speech tracking before infants know any words. If we can identify infants at 4 months who show poor speech tracking and are at risk for dyslexia, we may be able to use music in early infancy to help their language develop at a time when the brain is most plastic. In the meantime, we hope our babies enjoyed listening to all the tales of George and Martha, from Split Pea Soup to Mexican Jumping Beans!

Post Doc Investigator: Christina Vanden Bosch der Nederlanden

Vanden Bosch der Nederlanden, C. M., Joanisse, M. F., & Grahn, J. A. (2020). Music as a scaffold for listening to speech: Better neural phase-locking to song than speech. NeuroImage, 214, 116767. https://doi.org/10.1016/j.neuroimage.2020.116767

Learning about musical rhythm and timing

When we listen to music, speech, and other auditory information, we use the timing of events to help organize what we hear. For example, speech is organized into groups of syllables that form words, and strings of words that make sentences. Music uses timing information too, in the form of beat and rhythm. The beat of music is the steady pulse that we can tap our feet to. Although almost every kind of music has an underlying beat, the music of different cultures groups musical beats together in different ways. North American music tends to group beats into repeated groups of two, like a march, or three, like a waltz. However, in other cultures, music is often grouped into fives, sevens, or other more complex combinations of beats. These complex groupings are easy to understand for people who grow up in a musical culture that uses them, but difficult for those of us who didn't. However, we don't know at what age this becomes true, or why it would be helpful for us to specialize our listening abilities this way. In this study, we wanted to find out if 5-year-old children show the same kind of specialization that adults do. We also wanted to know if these rhythmic skills are related to other abilities that also use timing (like language and reading), or abilities involved in listening to sequences and copying them back (memory and motor skills). Early results suggest that 5-year-olds are, in fact, better at listening to and drumming back music that uses culturally familiar groupings, compared to more unfamiliar groupings. Additionally, children who have bigger vocabularies and better pre-reading skills often perform better on the musical rhythm tasks. Since both of these tasks require children to pay attention to timing information, it is possible that children who are more sensitive to timing information in both speech and music have an advantage in these areas.

Use of prosody and information structure in high-functioning adults with autism in relation to language ability

Abnormal prosody is a striking feature of the speech of those with autism spectrum disorder (ASD), but previous reports suggest large variability among those with ASD. We showed that part of this heterogeneity can be explained by level of language functioning. We recorded semi-spontaneous but controlled conversations in adults with and without ASD and measured features related to pitch and duration to determine (1) general use of prosodic features, (2) prosodic use in relation to marking information structure, specifically, the emphasis of new information in a sentence (focus) as opposed to information already given in the conversational context (topic), and (3) the relation between prosodic use and level of language functioning. We found that adults with ASD with high language functioning generally used a larger pitch range than controls but did not mark information structure, whereas those with moderate language functioning generally used a smaller pitch range than controls but marked information structure appropriately to a large extent. Both impaired general prosodic use and impaired marking of information structure would be expected to seriously impact social communication and thereby lead to increased difficulty in personal domains, such as making and keeping friendships, and in professional domains, such as competing for employment opportunities.

Becoming Musical Listeners Part 1: Was that a wrong note?

Adults who have never taken a music lesson can still typically tell which notes are wrong and which are right, even in a song that they have never heard before! This is because we gather a lot of knowledge about the music of our culture simply from listening to music even without any formal musical training. Two of the more sophisticated musical abilities that we acquire during childhood are sensitivity to key membership (knowing which notes and chords belong in a key and which do not) and harmony perception (knowing which notes and chords should follow others). While we know quite a lot about how children learn the rules of their native language, we don't know much about how they learn the rules of the music of their culture. In our study, we examined whether 4- and 5-year-olds could demonstrate any knowledge of key membership and harmony. We tested this question in two ways. In the first, we showed children videos of two puppets playing the piano: one played a song that followed the rules, and one played a song that contained either wrong notes or chords that went outside the key, or a note or chord that stayed in the key but was unexpected at that point. We asked children to make judgments about which puppet should get a prize for playing the best song. With this method, we found that 5-year-olds could demonstrate some understanding of key membership but not harmony, but 4-year-olds seemed to think every song sounded great, even the ones with wrong notes! Even though 4-year-olds couldn't show any explicit knowledge of key membership or harmony, we wondered whether their brains could register that there had been a wrong or unexpected note or chord even though they couldn't actually tell us that this had happened. To answer this question, we recorded EEG while children listened to the songs playing in the background and watched a silent movie to keep them entertained. 
We found that the pattern of brain activity was actually different in response to chords that were correct compared to out-of-key or unexpected chords. This means that even though 4-year-olds may not be consciously aware that a wrong note or chord has occurred, their brains still register the mistake. We are currently using the same method to see if 3-year-olds can show any knowledge of key membership and harmony. In addition, we are designing "easy" versions of these tasks, with many changes and more wrong notes. We want to know if children as young as 2 years of age can detect wrong notes if we give them a very simple and fun game to play (see next article).

Becoming Musical Listeners Part 2: Learning about which notes belong in a musical key

When adults and older children hear an unfamiliar song, they can immediately point out when someone plays a "wrong note" or sings "out of key". How can they do this when they haven't heard the song before? How do they even know that the note is wrong? People learn about the structure of the language and musical systems in their environment just through everyday exposure. With more exposure, they become more sensitive to rules about which words can go together in sentences, and which notes go together in a musical key. This year, we tested 11- and 12-month-old infants' sensitivity to musical key structure. We created two versions of a piano Sonatina by Thomas Attwood. One version presented the music in the key of G major (tonal version) and the other version alternated every beat between G major and G-flat major (atonal version). To an adult, the atonal version sounds wrong or out-of-key, because it has no tonal centre and all 12 chromatic notes are present. However, every chord in this version is still consonant (for more information on consonance and dissonance, see the "Part 3" article next). Infants control how long they listen to the tonal and atonal versions. If they spend most of their time listening to the tonal version, we interpret this as a preference for that version. Interestingly, 12-month-old infants who participate in interactive music classes with their parents already show a preference for the tonal version. However, 12-month-olds who do not participate in such classes do not show this preference until later. We believe that the increased exposure to Western music in the music classes leads to earlier sensitivity to this aspect of musical structure. We are just beginning an exciting new study to find out when the majority of children will start to prefer the tonal over atonal version of a musical piece. In this study, 2-year-olds will play a game on coloured foam mats, controlling which version of the song plays based on where they move on the mats.
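
For readers curious why alternating between G major and G-flat major leaves no tonal centre, the short Python sketch below counts the notes the two keys cover between them. It is an illustration only, not part of the study materials: the pitch-class numbering (C = 0) and the function name `major_key_pitch_classes` are assumptions made for this example, and it assumes the music uses every note of each scale.

```python
# Pitch classes are numbered 0-11 with C = 0 (a common convention, assumed here).
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale


def major_key_pitch_classes(tonic: int) -> set[int]:
    """Return the seven pitch classes of the major key built on `tonic`."""
    return {(tonic + step) % 12 for step in MAJOR_SCALE_STEPS}


G, G_FLAT = 7, 6  # pitch-class numbers for G and G-flat

g_major = major_key_pitch_classes(G)
g_flat_major = major_key_pitch_classes(G_FLAT)

combined = g_major | g_flat_major  # notes heard across the alternating version
shared = g_major & g_flat_major    # notes that belong to both keys

print("G major:      ", sorted(g_major))
print("G-flat major: ", sorted(g_flat_major))
print("Distinct notes when alternating:", len(combined))
print("Notes common to both keys:", sorted(shared))
```

Under these assumptions the two keys share only two notes (F-sharp/G-flat and B/C-flat) and together cover all 12 chromatic notes, which is consistent with the description of the atonal version above.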

Becoming Musical Listeners Part 3: What makes "nice sounds" sound nice to infants?

We combine notes in different ways to make music. Some combinations of notes (intervals) are called consonant: adult listeners (even those without any musical training) say that consonant intervals sound pleasant, good, smooth, in-tune, and correct. Other intervals are called dissonant, and listeners describe these intervals as unpleasant, rough, tense, out-of-tune, and incorrect. Six-month-old infants can detect an occasional dissonant interval in a set of consonant intervals, and infants as young as 2 months of age prefer to listen to consonant over dissonant intervals. In this study, we want to find out which aspects of musical sound contribute to this preference. In adults, researchers recently found that the aspect called harmonicity (how the different overtones or harmonics of a complex sound line up in frequency space) is closely related to perception of consonance. In the first study, we looked at harmonicity perception in 5- and 6-month-old infants. Infants sat on their parent's lap and controlled how long they listened to harmonic (perfectly lined up) or inharmonic (not-quite lined up) sounds over 20 trials by where they looked. The infants in this study looked much longer in order to hear the harmonic sounds, which we interpret as a preference for these sounds. These results show us that even at 6 months of age, infants are sensitive to the harmonicity aspect of consonance. In a second study, we are looking at another aspect of dissonance called beating. When we hear two tones that are very close together in frequency, we experience a rough or "beating" sensation. We can greatly reduce that sensation by taking these two tones and presenting one to the left ear and the other to the right ear. In order to figure out if infants prefer tones without beating, we are using headphones so that we can present a different sound to each ear. Infants control which sounds they hear based on where they look on a computer monitor.
Overall, so far we have discovered that infants show sensitivity to differences in harmonicity, which tells us that this aspect of musical sound is important for the perception of consonance and dissonance.
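
For readers who want to see where the "beating" sensation described above comes from, here is a minimal sketch. It is not the stimulus code used in the study; the function names and the example frequencies (440 Hz and 444 Hz) are assumptions chosen for illustration. It shows that two equal-amplitude tones added together are mathematically the same as one tone at the average frequency whose loudness rises and falls at a rate equal to the frequency difference.

```python
import math


def beat_frequency(f1_hz, f2_hz):
    """Rate (in Hz) at which the loudness of two summed tones rises and falls."""
    return abs(f1_hz - f2_hz)


def summed_tones(f1_hz, f2_hz, t):
    """Value at time t (seconds) of two equal-amplitude sine tones added together."""
    return math.sin(2 * math.pi * f1_hz * t) + math.sin(2 * math.pi * f2_hz * t)


def carrier_times_envelope(f1_hz, f2_hz, t):
    """The same signal rewritten as a fast carrier scaled by a slow envelope."""
    mean_hz = (f1_hz + f2_hz) / 2
    half_diff_hz = (f1_hz - f2_hz) / 2
    carrier = math.sin(2 * math.pi * mean_hz * t)
    envelope = 2 * math.cos(2 * math.pi * half_diff_hz * t)
    return carrier * envelope


if __name__ == "__main__":
    # Illustrative values only: two tones 4 Hz apart beat 4 times per second.
    print(beat_frequency(440.0, 444.0))
```

This slow envelope only arises when the two tones are physically added together. When each tone goes to a different ear, the sound waves never sum in the air, which is consistent with the reduced beating sensation described above.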

Hearing pitch: Timing codes in 4- and 8-month-old infants

Pitch perception is central to hearing music. Pitch is also important in other areas, such as attributing sounds to their appropriate sources, identifying a familiar voice, and extracting meaning from speech such as understanding whether a sentence was meant as a statement or a question. Past studies from our lab have shown that, even as infants, we have sophisticated auditory skills that allow us to make very accurate pitch judgments. Pitch is encoded in our brain in two codes, a temporal (timing) code and a spectral (frequency) code. Past research indicates that infants can use the frequency code. Here we investigated the development of the timing code. In order to do this, we needed to create sounds that contained timing but not frequency cues. Such sounds are referred to as iterated rippled noise (IRN) stimuli. First, we found out that 8-month-old infants could detect a change in the pitch of this type of sound using our conditioned head-turn method. We then moved to the EEG lab to examine the brain responses to a change in the pitch of IRN sounds. Here we found that 8-month-old infants showed a characteristic brain response to occasional pitch changes using IRN sounds. However, infants did not find these tasks easy! Unlike with sounds containing frequency information, when the infants were first exposed to IRN sounds, they could not tell if there was a change in pitch. Only after some experience and training did they show brain responses to pitch changes. We are currently examining whether or not 4-month-old listeners can also detect pitch changes in IRN sounds. In sum, these results suggest that the timing code for processing pitch in our brains is present but not well developed in young infants.
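
As a rough illustration of how an iterated rippled noise sound can be built, the sketch below uses the common delay-and-add recipe: start with random noise, add a delayed copy of the signal to itself, and repeat. This is a minimal sketch under stated assumptions, not the stimulus code from our studies; the function names, the number of iterations, and the delay are hypothetical. The repetition introduced by the delay shows up as a strong correlation between the signal and a copy of itself shifted by exactly that delay, which is the timing regularity a listener can hear as pitch.

```python
import random


def iterated_rippled_noise(n_samples, delay_samples, iterations, seed=0):
    """Build IRN by repeatedly adding a delayed copy of the signal to itself."""
    rng = random.Random(seed)
    signal = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]  # Gaussian noise
    for _ in range(iterations):
        # Shift the current signal later in time by delay_samples (zero-padded).
        delayed = [0.0] * delay_samples + signal[:-delay_samples]
        signal = [s + d for s, d in zip(signal, delayed)]
    return signal


def normalized_autocorrelation(signal, lag):
    """Correlation between the signal and itself shifted by `lag` samples."""
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]
    numerator = sum(centered[i] * centered[i + lag] for i in range(len(centered) - lag))
    denominator = sum(c * c for c in centered)
    return numerator / denominator


if __name__ == "__main__":
    irn = iterated_rippled_noise(n_samples=8000, delay_samples=40, iterations=8)
    # Strong self-similarity at the delay, little at an unrelated lag.
    print(normalized_autocorrelation(irn, 40))
    print(normalized_autocorrelation(irn, 23))
```

If the samples were played back at a hypothetical rate of 16,000 samples per second, a delay of 40 samples would correspond to a pitch near 16000 / 40 = 400 Hz.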

Monkeying around: Infants' ability to tell apart human voices and monkey voices

The ability to tell the difference between two individuals is very important for everyday social interaction. When someone cannot be seen, as over a telephone, a useful way for a listener to identify them is by the sound of their voice. Adults are specialized for human voices. They are much better at telling apart two voices from their own (human) species than they are at telling apart two voices from a foreign (monkey) species. Here we investigated whether people learn to be so good at human voices through a lot of experience with human voices, or whether we are simply born better at processing human voices. The purpose of Study 1 was to test whether specialization for human voices develops during infancy. Six- and twelve-month-old infants came to the lab, and were seated on their parent's lap facing the researcher. The infants listened to the voice of one human or monkey female and were taught to turn their head to the side every time the voice of a second female of the same species was presented. Correct head turns were rewarded with a dancing toy! Using this method we found that infants of both ages turned their heads more often when there was a change in speaker than when there was no change, indicating that they were able to tell the difference between the two speakers for both monkey and human voices. However, 12-month-olds showed better discrimination for human compared to monkey voices, while 6-month-olds were equally good with voices of both species. This shows that between 6 and 12 months of age infants become increasingly specialized for discriminating voices in their own species. This specialization for human voices likely occurs because infants experience many human voices in their daily environment, but no monkey voices. In Study 2, we tested whether experience is driving the effects we saw in the first study. To do so, we gave 12-month-old infants experience with monkey voices, and then repeated the discrimination tests of Study 1.
Specifically, we gave parents a book and CD in the form of a narrated storybook called "Beach Day for the Monkey Family" in which infants heard the monkey voices of a "father", "mother", "sister" and "brother" monkey. We found that these infants were better at telling apart new monkey voices than the infants in Study 1. This result indicates that older infants lose the ability to tell apart monkey voices because they are not exposed to these voices in their environment, and that by providing this exposure, their ability to discriminate monkey voices can be recovered. Discrimination of people by their voices is important for social interaction, recognizing familiar people and forming friendships. Together, these two studies show us that development of good discrimination for human voices depends on experience hearing human voices. To hear some sound clips from the experiment, click here.

Can 2-month-olds tell where a sound is coming from?

Sound localization refers to the auditory system's ability to use differences between the two ears in the loudness and timing of a sound in order to determine where in space the sound is coming from. For example, a sound in front of you will reach the two ears at the same time, and be equally loud in both ears, but a sound coming from the right will reach the right ear before the left ear and sound louder in the right ear than in the left ear. This ability provides listeners with useful spatial information, helping to direct attention to important sounding objects, such as someone talking, or oncoming traffic. Adults are very skilled at figuring out the location of a sound. Previously we have used the event-related potential (ERP) technique with EEG data to measure brain activity from 5-, 8-, and 12-month-old infants in response to changes in sound location. These data revealed that infants 5 to 12 months of age respond to the change in location with both an immature brain response (not seen in adults) and an adult-like mature brain response. In the current study, our results show that 2-month-olds respond with the immature brain response but do not show the mature response. This indicates that 2-month-olds can process sound location to some extent, but that adult-like processing of sound location does not begin until after 2 months of age. Future studies will look at infants between 2 and 5 months of age and use very small changes in sound location to determine whether the emergence of the adult-like response is associated with better sound localization abilities.
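
To give a sense of how small the timing difference between the two ears is, here is a minimal sketch using a classic spherical-head approximation (often attributed to Woodworth). It is an illustration, not part of our analysis: the function name is hypothetical, the default head radius of 0.0875 m is a typical adult value assumed here, and an infant's smaller head would give even smaller differences. Loudness differences between the ears are not modeled.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # approximate speed of sound in room-temperature air


def interaural_time_difference(azimuth_deg, head_radius_m=0.0875):
    """Approximate arrival-time difference (seconds) between the two ears.

    azimuth_deg is the horizontal angle of the source: 0 is straight ahead,
    positive values are to the right, negative values to the left.
    A positive result means the sound reaches the right ear first.
    """
    if abs(azimuth_deg) > 90.0:
        raise ValueError("this approximation is only used here for -90 to 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / SPEED_OF_SOUND_M_PER_S) * (theta + math.sin(theta))


if __name__ == "__main__":
    for angle in (0, 30, 60, 90):
        microseconds = interaural_time_difference(angle) * 1e6
        print(f"{angle:>2} degrees: {microseconds:.0f} microseconds")
```

Under these assumptions, even a sound directly to one side arrives at the near ear less than a thousandth of a second before it reaches the far ear, so the auditory system must resolve very fine timing differences.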

Active music classes in infancy enhance musical, communicative and social development

Previous studies suggest that musical training in children can positively affect various aspects of development. However, it remains unknown how early in development musical experience can have an effect, what the nature of any such effects is, and whether different types of music experience affect development differently. We found that random assignment to 6 months of active participatory musical experience beginning at 6 months of age accelerates acquisition of culture-specific knowledge of Western tonality in comparison to a similar amount of passive exposure to music. Furthermore, infants assigned to the active musical experience showed superior development of prelinguistic communicative gestures and social behaviour compared to infants assigned to the passive musical experience. These results indicate that (1) infants can engage in meaningful musical training when appropriate pedagogical approaches are used, (2) active musical participation in infancy enhances culture-specific musical acquisition, and (3) active musical participation in infancy impacts social and communication development.

The benefits of music lessons

Music lessons can be a fun and engaging way to stimulate the minds of children and help them learn a skill that they can enjoy throughout their lives. But can participating in music training also benefit children in other areas of their education and development? Media reports have certainly been full of claims that music lessons can improve children's math and language skills, and make them smarter in general. However, science has yet to conclusively demonstrate whether these claims are justified. Past research suggests that music training may affect a wide variety of different skills, including reasoning abilities, language development, and academic competence. If extensive music training is associated with such widespread benefits, we reasoned that its greatest effects might be observed for more basic skills like memory, attention, and reading ability. These types of skills could have a broad impact on general cognitive skills and school performance. To test this question, we studied a group of 6- to 9-year-old music students who had taken music lessons for varying lengths of time. We examined whether the longer children had been in music lessons, the better they performed on a variety of cognitive tests. Our results suggested that music training was associated with two specific skills, memory and reading comprehension, rather than cognitive skills in general. These findings are exciting because improving memory and reading may form a foundation for improving many other important skills, such as acquiring general knowledge about the world, following directions or instructions, and reasoning through problems. Interestingly, we found that a particular aspect of attention that allows us to focus on one thing while ignoring distractions (e.g., saying that the centre fish is pointing left while ignoring the other fish pointing right, above) was not associated with music training. 
This result suggests that music lessons do not have immediate benefits for all cognitive skills. At this point we don't know whether it takes more training to see effects of music on attention, or whether musical training is simply not related to attention. Further research is needed for us to answer this question. But we can conclude that musical training does have benefits for memory and reading comprehension. This important research from last year was recently published in the journal "Music Perception".

The music babies hear changes their brain responses

We know from other research (some of it from our lab!) that experience changes the brain. But how much experience do you need in young infancy to see changes in the brain? In this study, two groups of 4-month-old infants listened to a CD of children's songs played on musical instruments for 20 minutes every day for one week. For one group, all songs were played in guitar timbre, and for the other group, all songs were played in marimba timbre. Timbre is the word we use to describe the difference in sound quality between different instruments, people, and objects. Timbre perception is extremely important in everyday listening; for example, to recognize individual voices and musical instruments. After a week of listening at home, we recorded each infant's brain activity in the lab using EEG as they listened to small pitch changes in both guitar and marimba timbre. As it turns out, the babies who heard guitar music showed larger brain responses to guitar tones, and the babies who heard marimba music showed larger brain responses to marimba tones! This difference is even more impressive because the specific musical notes they heard in the lab were different than the notes on the CD. This means that their learning of the timbre generalized to new notes they had not heard before in that timbre. The pattern of results shows us that even a short amount of exposure lasting a few minutes a day for one week can have measurable effects on infant brain responses. More and more, we are now learning about how brain representations can be shaped by particular experiences in infancy and childhood. This exciting research was recently published in the neuroscience journal "Brain Topography".

Infants can organize the sounds in their world

In most natural environments there are many sounds occurring at the same time. The sound waves combine in the air and reach the ear as one complex waveform. The brain needs to figure out how many sounds are present and which parts of the complex waveform belong to each sound source in the environment. Adults are very good at doing this. For example, if adults are at a busy party with loud music and many people talking, they have little problem separating the different voices from each other and from the music. We wanted to understand whether infants also have this ability to organize sounds occurring at the same time into different auditory objects. Over the past 3 years we have run a number of studies testing how well infants can separate sounds in their environment. In study 1 we tested 6-month-old infants using our conditioned head-turn setup. We used one complex tone with multiple harmonics at integer multiples of the fundamental frequency. Infants heard this tone repeating and every once in a while we mistuned one of the harmonics. For adults, the in-tune complex tone is heard as one sound, but mistuning one harmonic causes that harmonic to be heard as a separate, second sound in addition to the complex tone. At 6 months, infants turned their head (in order to see the toys light up) on occasional presentations of the sound with the mistuned harmonic, indicating that they can hear two tones at once. This important study will soon be published in the Journal of the Acoustical Society of America. In study 2 we used EEG to study whether 2-, 4-, 6-, 8-, 10-, and 12-month-olds can perceive two auditory objects at once. Thank you to all those babies who wore our EEG cap and listened to our 1-tone and 2-tone sounds! We have tested over 140 infants between 2 and 12 months of age to determine if the infant brain can tell the difference between when it is hearing one sound compared to two.
In adults and older children we know that there is a characteristic brain response to hearing two sounds. We used EEG to study whether or not infants also show this brain response. At 2 months of age we did not see different brain responses to one versus two sounds. However, we found that as early as 4 months of age, infants show these different responses. This tells us that by 4 months they can separate two sounds that are happening at the same time. In study 3 we are using EEG to study 4-year-olds. Although the 4- to 12-month-olds showed different brain responses to one versus two sounds, these responses were different from those of adults. We are now testing 4-year-olds to determine when the brain begins to show mature adult-like responses to hearing two sounds at once. In study 4 we are studying how infants integrate sound and vision to perceive objects. In the everyday world, we get auditory and visual information about objects, and we integrate this information together. For example, the speech we hear from a person is integrated with the mouth movements we see. We are testing this integration now. Specifically, we want to know whether 4-month-old infants prefer to look at one bouncing ball when they hear the sound of one ball and two bouncing balls when they hear two balls. This study will help us understand if infants know that one sound should be coming from one object and two sounds from two objects.
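
The complex tone used in study 1 above can be described with a few lines of code. This is a minimal sketch rather than our stimulus code: the function names, the 200 Hz fundamental, the choice of the third harmonic, and the 8% shift are all assumptions made for illustration. It lists the harmonics at integer multiples of the fundamental, shifts one of them, and checks whether every component still lines up with the harmonic series.

```python
def harmonic_frequencies(fundamental_hz, n_harmonics):
    """Frequencies at integer multiples (1x, 2x, 3x, ...) of the fundamental."""
    return [fundamental_hz * k for k in range(1, n_harmonics + 1)]


def mistune(frequencies, harmonic_number, percent):
    """Return a copy with one harmonic (1-based) shifted by the given percentage."""
    shifted = list(frequencies)
    shifted[harmonic_number - 1] *= 1 + percent / 100
    return shifted


def is_harmonic(frequencies, fundamental_hz, tolerance_hz=1e-6):
    """True if every component sits on an integer multiple of the fundamental."""
    return all(
        abs(f - fundamental_hz * round(f / fundamental_hz)) <= tolerance_hz
        for f in frequencies
    )


if __name__ == "__main__":
    in_tune = harmonic_frequencies(200.0, 6)   # 200, 400, ..., 1200 Hz
    mistuned = mistune(in_tune, harmonic_number=3, percent=8)
    print(is_harmonic(in_tune, 200.0))    # all components line up
    print(is_harmonic(mistuned, 200.0))   # the shifted component stands apart
```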

LIVELab Studies

What does musical group flow really feel like?

Being in “flow”—being pleasurably absorbed in a task that is challenging but manageable, to the point of losing track of time and self, often described as being “in the zone” where everything seems to “click”—has been studied extensively in individuals. But flow can also occur in groups, through coordination, mutual responsiveness, and shared awareness during a task. Music performance may create a special kind of group flow that relies on continuous mutual prediction to keep musicians’ actions aligned in time. To understand the experience of musical group flow, we first collected written descriptions of 85 musicians’ peak group musical experiences and identified the main themes using thematic analysis. The main themes were: group cohesion, collective effort, mutual enjoyment, fluid interaction, automaticity, holistic awareness, and altered state of consciousness. We then used these themes to create 71 survey items, which we gave to about 600 musicians. Exploratory factor analysis of their responses revealed a five-factor model that matched the themes from the qualitative analysis, providing empirical support for the idea that musical group flow is a unique group-level phenomenon that arises from smooth interpersonal coordination and creates a special mental state of mutual absorption, cohesion, and connection. This research helps explain why playing music with others—whether in professional groups or just with friends—can be so rewarding.

PhD student researcher: Luc Klein

Low bass drives audience movement

One factor that influences how we move to music is the amount of bass, or low-frequency sounds, in the music. In previous experiments, we have found that lower-pitched notes trigger quicker brain responses and enhance movement timing. However, we had not yet tested whether bass directly causes increased movement—that is, if adding more bass at a concert actually makes people dance more. In a unique concert experiment at the LIVELab, we altered the music to include extra-low bass while measuring audience movement. During a performance by the renowned electronic music duo Orphx, we used special very-low frequency (VLF) speakers to add subtle VLF sounds to the music. We turned the VLF speakers on and off every 2.5 minutes and used motion capture to track how the audience moved. Even though the added low bass was so quiet that it could not be consciously detected, audience members moved more when it was present. We are now following up on this result to understand the physiological mechanisms that might explain why bass increases movement.
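
The on/off design described above can be sketched in a few lines. This is an illustration only, not the analysis from the published paper: the function names are hypothetical, the sketch assumes the first 2.5-minute block had the VLF speakers on (the text does not say which came first), and the numbers in the usage example are made-up placeholders, not study data. Each movement measurement is labeled by whether it fell in an "on" or "off" block, and the two conditions are then averaged separately.

```python
BLOCK_SECONDS = 150.0  # 2.5 minutes per on or off block


def vlf_is_on(t_seconds, first_block_on=True):
    """Whether the VLF speakers are on at a given time since the start."""
    block_index = int(t_seconds // BLOCK_SECONDS)
    in_even_block = block_index % 2 == 0
    return in_even_block == first_block_on


def mean_by_condition(samples, first_block_on=True):
    """Average movement separately for on and off blocks.

    samples is an iterable of (t_seconds, movement) pairs; a condition with
    no samples gets None instead of an average.
    """
    totals = {"on": 0.0, "off": 0.0}
    counts = {"on": 0, "off": 0}
    for t_seconds, movement in samples:
        condition = "on" if vlf_is_on(t_seconds, first_block_on) else "off"
        totals[condition] += movement
        counts[condition] += 1
    return {
        condition: (totals[condition] / counts[condition]) if counts[condition] else None
        for condition in totals
    }


if __name__ == "__main__":
    # Placeholder values purely to show the call; these are not measurements.
    toy_samples = [(10.0, 2.0), (20.0, 4.0), (160.0, 1.0)]
    print(mean_by_condition(toy_samples))
```

Alternating the conditions many times within one concert means that each audience member serves as their own comparison, and slow changes over the evening (for example, the crowd warming up) affect both conditions roughly equally.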

Postdoc research: Daniel Cameron

Cameron, D. J., Dotov, D., Flaten, E., Bosnyak, D., Hove, M. J., & Trainor, L. J. (2022). Undetectable very-low frequency sound increases dancing at a live concert. Current Biology, 32(21), R1222–R1223. https://doi.org/10.1016/j.cub.2022.09.035

Dance with many, dance with one: Optimizing learning to dance

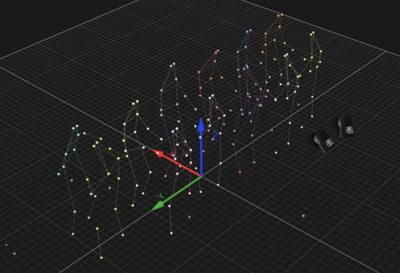

Improvised partner dancing is a joyful activity that allows people to meet new friends, exercise, improve memory and coordination, and build self-confidence. In this activity, two people improvise and coordinate dance steps together. To do this, it is necessary to learn how to give and receive cues through physical contact, such as holding hands or an arm-to-shoulder embrace. This learning mostly happens through practice and trial and error. In group dance lessons, people can either switch partners during learning or keep the same partner. We wondered which approach is more effective for learning the non-verbal communication needed for coordination. We invited participants to learn a simple partnered dance exercise at the LIVELab. Untrained participants were paired with trained dancers to perform the exercise as synchronously as possible while holding hands. One group switched partners often, while the other always danced with the same person. At the end of the experiment, we measured how synchronized the couples in each group were to see which strategy produced better dancers. To measure synchronization, we recorded the dancers’ movements using motion capture, which allows us to measure the position of different body parts (e.g., head, shoulders, elbows, and feet) with high accuracy. Our results show that people who danced repeatedly with the same partner learned to synchronize better, even when tested with partners they had never danced with before. This suggests that, at least in the early stages of learning to dance with a partner, sticking with the same person may be more effective. Further studies are needed to see if this advantage continues at more advanced stages of training, when flexibility might become more important.


How do different people experience live classical music?

Listening to music is a favorite activity for mood regulation, relaxation, and enjoyment. With the rise of personal music devices, music listening can now be experienced alone. Yet, people still love live concerts and rate them among their most memorable experiences. To understand why, we measured 20 audience members’ brain, heart, and skin responses at the most expressive moments during a concert where two pianists from the Canadian Chopin Society performed in the LIVELab. After each piece, audience members also rated their enjoyment, emotional intensity, familiarity, and feelings of connection with the audience and the performer. Performers provided annotations of the most expressive sections of their performance. We also collected continuous ratings of valence (positive/negative emotion) and arousal (intensity) from a new group of participants who listened to the pieces online. Interestingly, the results so far are mixed—with different people showing different biological responses to the music at different times. We are now interested in what factors might influence how a person experiences classical music.

Postdoc research leader: Martin Miguel

How do musicians learn to synchronize during expressive performances?

When playing expressive music that can speed up and slow down, how do musicians stay together? If they wait to hear what another musician does, it will be too late to stay in sync, so we investigated whether one musician can predict what another musician will play next based on what they have just played. We asked several professional violinists to play along with a very expressive recording of "Danny Boy" several times in a row while we recorded their playing. We then used a mathematical technique called Granger causality to determine how much the recording influenced the violinists’ upcoming playing. We found that, at each moment during the piece, our violinists were influenced by how the recording had just sounded. Furthermore, as they played with the recording multiple times, the violinists became more and more synchronized with it, and relied less on predicting from what they had just heard. In other words, they built an “internal model” or detailed memory of the musical intentions of the violinist on the recording, which helped them coordinate. So learning to play music with others is an amazing, complicated process involving prediction and building internal memories.


PhD student investigator: Luc Klein

Klein, L., Wood, E., Bosnyak, D., & Trainor, L. J. (2022). Follow the sound of my violin: Granger causality reflects information flow in sound. Frontiers in Human Neuroscience, 16, 982177. https://doi.org/10.3389/fnhum.2022.982177
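The idea behind Granger causality can be sketched in a few lines: one signal "Granger-causes" another if its recent past improves predictions of the other signal beyond what that signal's own past already provides. The example below is a simplified illustration with simulated data, not the published analysis; the function name `granger_f_stat`, the lag of 2, and the simulated `recording` and `violinist` series are all assumptions made for the sketch.

```python
import numpy as np

def granger_f_stat(x, y, lag=2):
    """F statistic for whether past values of x improve prediction of y
    beyond y's own past (a basic linear Granger causality test)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y) - lag
    target = y[lag:]
    # Column k-1 holds the series delayed by k samples.
    y_lags = np.column_stack([y[lag - k: len(y) - k] for k in range(1, lag + 1)])
    x_lags = np.column_stack([x[lag - k: len(x) - k] for k in range(1, lag + 1)])
    ones = np.ones((n, 1))
    restricted = np.hstack([ones, y_lags])      # y's own past only
    full = np.hstack([ones, y_lags, x_lags])    # y's past plus x's past

    def rss(design):
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        return float(resid @ resid)

    rss_r, rss_f = rss(restricted), rss(full)
    dof = n - full.shape[1]
    return ((rss_r - rss_f) / lag) / (rss_f / dof)

# Simulated (hypothetical) data: the "violinist" follows the "recording"
# one step later, plus a little noise; the recording ignores the violinist.
rng = np.random.default_rng(0)
recording = rng.standard_normal(500)
violinist = np.zeros(500)
for t in range(1, 500):
    violinist[t] = 0.8 * recording[t - 1] + 0.1 * rng.standard_normal()

f_forward = granger_f_stat(recording, violinist)   # recording -> violinist
f_backward = granger_f_stat(violinist, recording)  # violinist -> recording
print(f"recording -> violinist: F = {f_forward:.1f}")
print(f"violinist -> recording: F = {f_backward:.1f}")
```

A large F statistic in one direction and a small one in the other is the pattern one would expect when information flows from the recording to the player and not the reverse.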

Making music together: Incredible coordination!

In order to play a musical instrument, the musician’s brain needs to anticipate what they want to play next in order to have time to plan and execute the motor movements for the next notes. When that musician plays with others in, for example, a string quartet or a jazz trio, there is an added challenge. The musician also has to coordinate with the other musicians: keep in sync, phrase together, and match each other’s musical styles. If each musician waits to hear what the others are doing, it will be too late to stay together! So musicians not only have to anticipate how they are going to play next, they also have to anticipate or predict how their fellow musicians are going to play next. We have been studying how they do this. In one study, we used motion capture equipment in the LIVELab to measure the body sway of each musician. Just as people gesture with their hands when they talk, which helps them to speak fluently, musicians sway their bodies when they play music, and we think this helps them to plan the “big picture” of how they want to play the music. We showed, using a mathematical technique called Granger causality, that from the body movements of one musician at one point in time, we could predict how another musician playing with them was going to move at the next point in time. In other words, through their body sway we could measure communication between the musicians! We found that musicians assigned as “leaders” influenced other musicians in the group more than those assigned as “followers”. In a second study, we found that the higher the communication among all the musicians, as measured by body sway coupling, the higher listeners rated the quality of the performance. So good communication through body sway leads to great music! We believe that the coordination dynamics we are uncovering between musicians also apply to many other situations in which people need to coordinate their actions.

Chang, A, Kragness, H, Livingstone, S, Bosnyak, D, and Trainor, L (2019). Body sway reflects joint emotional expression in music ensemble performance. Scientific Reports, 9, 205.

Baby Opera!

We all know there's something special about a live concert, especially now when we can’t go to live concerts! Whether it's a famous singer or a guitarist at your favourite local restaurant, seeing a musician performing right in front of us enhances our experience of the music compared to hearing it on a recording. In this study, researchers from the University of Toronto Scarborough (and former PhD graduates from our Auditory Development Lab), Drs. Laura Cirelli and Haley Kragness, teamed up with us again to learn how babies experience an audiovisual recording of a concert compared to the real thing. Groups of 25-30 babies came to McMaster’s unique LIVELab research-concert hall (https://livelab.mcmaster.ca/) to watch a new musical show called "The Music Box", composed by Toronto artist Bryna Berezowska, either live or projected on the big screen. We recorded the babies’ reactions, as well as their physiological responses, like heart rate. It made for quite a lively event, and some of it was captured in this CHCH news clip (https://www.chch.com/mcmaster-university-teams-up-with-musicians-to-create-opera-for-babies/). The results of the study will help us better understand the role of live performance in our emotional and physiological reactions to music.

Analyzing attraction in speed dating: Turning the LIVELab into the LOVELab!

Social bonding is fundamental to human society, and romantic interest involves an important type of bonding. The role of nonverbal interaction in initial romantic interest has been little studied, despite being commonly viewed as a crucial factor. Speed dating is an excellent situation for investigating initial romantic interest, as it involves a common real-world activity while at the same time allowing us to have high experimental control. We conducted a real speed dating event in the LIVELab while we measured the body sway of the participants using motion capture. The results showed that people’s long-term romantic interest can be predicted by nonverbal interactive body sway communication, above and beyond their ratings of physical attractiveness. In addition, we found that the presence of groovy background music during speed dates promoted interest in meeting a dating partner again. This novel approach to measuring nonverbal communication could potentially be applied to investigate nonverbal aspects of social bonding in other dynamic interpersonal interactions, such as between infants and parents, and in nonverbal populations, including those with verbal communication disorders.

Chang, A, Kragness, HE, Tsou, W, Bosnyak, DJ, Thiede, A, and Trainor, LJ (2021). Body sway predicts romantic interest in speed dating. Social Cognitive and Affective Neuroscience, 16(1-2), 185-192.

https://www.chch.com/speed-dating-study/

Wellness and Music

Music and managing stress levels in undergraduate students

Stress levels among undergraduate students appear to be rising, and the pandemic may have exacerbated that trend. We conducted a survey during the exam period in April 2020 while the COVID-19 precautionary measures were in effect. The survey collected information about students’ music background, anxiety, and extra-curricular activities. We were particularly interested in knowing if playing an instrument or listening to music contributed to overall well-being (feeling less stressed or anxious) during a period of time perceived as stressful (exams and a global pandemic).

786 undergraduate students completed the survey. Preliminary results indicate 65% of students were experiencing high anxiety, which is worrying. We also found that listening to music was among the top extra-curricular activities that students reported engaging in to support their wellness. Other highly rated activities were exercise and socializing with others through social media. When asked if they would be interested in engaging in online group music therapy, over half of students stated yes or maybe.

There are currently no music therapy programs for undergraduate students on Canadian university campuses that we are aware of. The results of this survey indicate that providing such programs could support student wellness in a proactive manner by intervening to manage stress before it reaches crisis levels. As a result, we are now conducting a study on how group music therapy affects physiological and self-report measures of stress. If you are an undergraduate student at McMaster who would like to participate, please contact us at auditory@mcmaster.ca

Online Community Music Therapy- a proactive tool for reducing stress and anxiety

Verbal-based therapies are the standard of care for mental health both on and off campus. Mental health concerns continue to rise, and undergraduate students are particularly vulnerable to compromised wellbeing. This research will explore the efficacy of community music therapy as a proactive approach to wellbeing. Many supports offered on campus to undergraduate students are designed to meet students in crisis as opposed to preventing crisis, and there are often long wait times associated with supports for students in crisis. Additionally, reactive crisis support is offered as individual sessions (as opposed to group sessions), which adds financial costs for the university on top of the aforementioned wait times.

This research is designed to offer online group music therapy sessions, drawing upon the model of community music therapy. Data will be collected from two different online group music therapy sessions ((1) active music therapy interventions and (2) passive music therapy sessions) as well as from the online Open Circle groups, a verbal-based support group available to McMaster students. In addition to the online community music therapy group, data will be collected from a control group (students who do not participate in either group) and a wait-listed group. We will collect data about state anxiety, perceived stress, quality of life, heart rate variability, and cortisol levels.